Cheap Affordable House Renderings | Cheap Affordable Residential Renderings

Beautiful 3D. They are more suitable for construction bids.

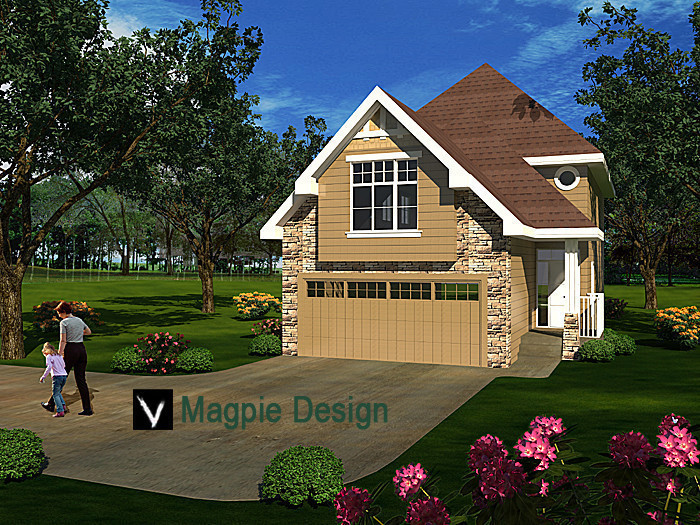

Affordable 3D. They are suitable for the average consumer.

Stunning 3D Rendering Services

Photorealistic Architectural Visuals

Elevate Your Design Projects

Transform your ideas with high-quality 3D rendering and architectural visualization. Perfect for architects, developers, and designers. Contact us today!

Get a Quote Now!

Expert 3D Architectural Rendering

Visualize Your Building Projects

Professional 3D Design Studio

Bring your architectural designs to life with our photorealistic 3D renderings. Trusted by architects and real estate professionals. Start now!

Request Your Rendering!

3D Interior & Exterior Rendering

High-Quality Visualization

Perfect for Real Estate

Showcase your interiors and exteriors with stunning 3D renderings. Affordable, professional, and tailored to your needs. Contact us now!

See Your Design in 3D!

3D Rendering for Real Estate

Sell Properties Faster

Photorealistic Visuals

Attract buyers with lifelike 3D architectural renderings for your properties. High-quality visuals for real estate and developers. Get started!

www.magpiesdesign3d.com

Boost Your Listings!

Stunning 3D Rendering Services

Architectural Visualization Experts

Bring Your Designs to Life

Professional 3D rendering and architectural visualization for your projects. High quality and fast turnaround.

Specializing in realistic 3D design and building renderings. See your vision before it's built!

Premium 3D Architectural Rendering

Visualize Your Building Designs

Top-Rated 3D Rendering Studio

Get high-quality 3D architectural renderings for residential, commercial, and real estate projects.

Experience the power of 3D visualization. Contact us today for a free quote!

Although there are a variety of uses for architectural illustration, house rendering is the most personalized use and is often the most appealing to designers. Architectural renderings are used for a wide range of projects, from extensions to office parks to complex water slides, and designing a house can also be greatly enhanced by using 3D renderings. There are a variety of factors that go into quality house design and architectural rendering.

House Renderings and the Interior

One of the key elements of 3D architectural rendering is properly developing the interior of the house. Not only is this one of the most important things clients will be looking for, but it is also a major factor that sets house rendering apart from corporate design.

By using 3D rendering to display and design the interior of a home, you will be able to use features such as flooring, furniture and lighting to tailor the interior to the needs of your residential customers.

Architectural Renderings for the Home Exterior

In addition to using house rendering to perfect the interior of a home, it can also be used to design the exterior. Often, house renderings are used to perfect the front yard or back yard of a residential space.

House rendering can also be used to see the effects of additional rooms or a patio extension. Using architectural rendering before home improvements are attempted has the potential to save a lot of money and headaches. Renderings can also help with a change in landscaping themes. With a simple exterior 3D rendering, you can see how the landscaping will look without having to buy any product.

Architectural Renderings and Lighting

One of the things that must not be overlooked in architectural renderings is lighting. Without the proper use of lighting, a 3D rendering is likely to look fake and will not be an accurate representation of the final product. To make the most of your images, you must ensure that the lighting is properly configured.

House rendering that includes the correct lighting, both interior and exterior, will make the home’s image come alive. These architectural illustration images will also help you to show what to expect from the finished product.

Best Benefit of 3D Renderings

Perhaps the greatest benefit of architectural rendering is that you will have the ability to customize your images. Not only is this a way for you to court potential clients, but it will also help to retain your residential customers.

By being able to respond quickly and professionally to the needs of your clients through 3D rendering, you will also save yourself a lot of headaches. With house rendering, if your client wants to see a virtual design of different rooms within the home, it is easily accomplished. This level of customization is a huge benefit of architectural rendering.

Although many renderings are of large projects, the use of 3D architectural rendering for residential clients is an extremely powerful tool. Using the key elements of house rendering, such as interior, exterior and lighting, will help you communicate your final design solution more clearly.
